Generating music with AI (or transformers go brrrr)

💡 Intro:

Recently I took part in a music generation contest from Yandex and AI Community. The task is simple to state: you are given the start of a melody and must generate a continuation. The winner is chosen by human annotator voting.

Okay, let’s get started. 👏

Usually in musical tasks we work with a music format like MIDI, which contains information about when every note starts and stops playing, or with raw audio like .wav. But in this contest the training data consists of classical piano melodies written as sheet-music notes.

You can also check out the repo with the solution.

🗂 Training and validation data format

Yeah, the model will read notes just like a regular human musician 👨‍🎤. In real life, musical notes are written with special music symbols 🎵🎶🎵. But how do we represent this format on a computer?

For this there is ABC notation, a markup language for melodies that contains info about notes, bars, tempo and more.

Here is an example of ABC notation and the same melody in regular sheet music. At the head of the document we have some meta info about the melody: X is the reference number, T the title, M the metre, L the unit note length, K the key. Then come the notes of the composition. Vertical lines separate the bars, and every Latin letter with its modifiers (comma, number, and others) is a note. These notes are played separately, one after another.

But if you have ever played the piano, you know that you can press two or more keys at the same time; this is called a chord. In ABC notation a chord is written as notes inside square brackets, like this: [ABC].

Parentheses mark a slur: the notes are still played one after another, but they flow smoothly into one another.
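
To make the notation concrete, here is a small Python sketch. The tune is made up for illustration (it is not from the contest data), and the parsing is deliberately naive: it only pulls out the header fields, the bars, the chords in square brackets and the groups in parentheses.

```python
import re

# A made-up tune for illustration only (not taken from the contest data).
ABC_TUNE = """X:1
T:Example tune
M:4/4
L:1/8
K:C
C2 E2 G2 c2 | [CEG]4 (cde2) | G,2 ^F2 [DF]4 |"""

lines = ABC_TUNE.splitlines()

# Header lines look like "K:C": one letter, a colon, then the value.
header = dict(line.split(":", 1) for line in lines if re.match(r"^[A-Za-z]:", line))
body = " ".join(line for line in lines if not re.match(r"^[A-Za-z]:", line))

# Vertical lines separate bars.
bars = [bar.strip() for bar in body.split("|") if bar.strip()]

# Square brackets: notes pressed at the same time.
chords = re.findall(r"\[[^\]]*\]", body)

# Parentheses: notes played one after another, but smoothly.
slurs = re.findall(r"\([^()]*\)", body)

print(header)
print(bars)
print(chords, slurs)
```

Real ABC files have more syntax than this (repeats, inline fields, tuplets), so treat the regular expressions as a reading aid rather than a parser.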

You can read more about ABC notation here. You can also play with ABC here and write your own melody.

Moving on! 🏃

🔍 More about competition task

In this challenge the task is to generate 8 bars that continue 8 input bars of a melody. The training data contains melodies from the wild with different lengths; the validation data contains only the first 8 bars.

🤖 Transformers

Before we talk about melody generation, let's talk about machine translation.

In the last few years the state-of-the-art approach in machine translation has been seq2seq (sequence-to-sequence) generation with transformer models. I'll describe it in simplified form; you can read more about the transformer architecture and seq2seq training in this article.

- First we take some input text and transform it into a sequence of tokens. Tokens are numbers that represent parts of the text, like words or n-grams.

- We feed these tokens into the encoder part of the transformer model and get a hidden representation of the input text.

- This hidden representation is passed to the decoder part, which generates output tokens one after another, based on the hidden states and the tokens generated so far.

- We convert the output tokens back into text and get the output of our translation model.

So, how does this apply to music generation? 🤔 Elementary! The 8 input ABC bars are the input of the model, and the 8 generated bars are the output. We simply generate ABC markup text from the input ABC, just like in a translation task!

I implemented the model with the HuggingFace Transformers library.

📝 Data preprocessing

Before we start training, we must preprocess the training data into a clean format.

Read the data 👨‍💻. First I define an ABC reading function that removes all spaces, tabs and newline characters, and splits the data into the meta-info keys and the notes.
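
The original reading code is not shown here, so the following is only a minimal sketch of what such a function could look like. The name `read_abc` and the rule "a header line is one letter followed by a colon" are my assumptions.

```python
import re

# Assumption: a meta-info line is a single letter, a colon, then the value.
HEADER_LINE = re.compile(r"^([A-Za-z]):(.*)$")

def read_abc(abc_text):
    """Split ABC text into a dict of header keys and a whitespace-free note string."""
    keys = {}
    note_lines = []
    for line in abc_text.splitlines():
        line = line.strip()
        if not line or line.startswith("%"):
            continue  # skip blank lines and ABC comments
        match = HEADER_LINE.match(line)
        if match:
            keys[match.group(1)] = match.group(2).strip()
        else:
            # Remove spaces and tabs inside the note line.
            note_lines.append(re.sub(r"\s+", "", line))
    # Joining without a separator also drops the newlines between note lines.
    return keys, "".join(note_lines)

keys, notes = read_abc("X:1\nT:Demo\nM:4/4\nL:1/8\nK:C\nC2 E2 | G2\tc2 |\n[CEG]4 z4 |\n")
print(keys)
print(notes)
```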

Data reading is done! What about data cleaning?

🧼 Data cleaning. First we need to split our set of melodies with varying numbers of bars into melodies of 16 bars each (8 bars for the input and 8 bars for the target). Let's split them.

Okay, now I'll tell you about some cleaning heuristics that I found.
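
A possible way to do the split, as a hedged sketch: the function name is hypothetical, the windows do not overlap (the original code may have used a sliding window instead), and splitting on "|" ignores special bar lines such as repeats.

```python
def split_into_pairs(notes, bars_per_side=8):
    """Cut a whitespace-free ABC note string into (input, target) pairs of bars."""
    bars = [bar for bar in notes.split("|") if bar]
    window = 2 * bars_per_side
    pairs = []
    # Assumption: non-overlapping windows; leftover bars at the end are dropped.
    for start in range(0, len(bars) - window + 1, window):
        chunk = bars[start:start + window]
        source = "".join(bar + "|" for bar in chunk[:bars_per_side])
        target = "".join(bar + "|" for bar in chunk[bars_per_side:])
        pairs.append((source, target))
    return pairs

# Tiny demo with 2 + 2 bars per sample so the output stays readable.
demo_pairs = split_into_pairs("A2|B2|C2|D2|E2|F2|G2|A2|B2|", bars_per_side=2)
print(demo_pairs)
```

With the default `bars_per_side=8`, a melody shorter than 16 bars simply yields no samples.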

Removing long silence 🔈. In ABC you can write a long silence with the token “x8”; it is used, for example, in intros. I found that removing this silence from the data improves the generation quality.
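
One possible reading of this heuristic, sketched in Python: delete every "x8" token and drop the bars that become empty. Whether the original code removed tokens or whole bars is not stated, so this is an assumption, and the function name is hypothetical.

```python
import re

# "x8" not followed by another digit or a slash, so "x80" or "x8/2" are left alone.
LONG_REST = re.compile(r"x8(?![0-9/])")

def remove_long_rests(notes):
    """Remove 'x8' rests from a whitespace-free ABC note string."""
    cleaned = [LONG_REST.sub("", bar) for bar in notes.split("|")]
    # Bars that contained nothing but silence disappear completely.
    return "".join(bar + "|" for bar in cleaned if bar)

print(remove_long_rests("x8|x8|C2E2G2c2|[CEG]4x8|"))
```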

Removing samples where the input is similar to the target 👥. I found that if I remove samples where the input and the target bars are similar, the model generates more diverse melodies. I measured similarity with the Levenshtein distance.
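
As a rough sketch, the filter could look like this. The Levenshtein distance is the standard dynamic-programming version; normalising by the longer string and the 0.2 threshold are my assumptions, not values from the contest solution.

```python
def levenshtein(a, b):
    """Classic edit distance: insertions, deletions and substitutions cost 1."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,         # delete char_a
                current[j - 1] + 1,      # insert char_b
                previous[j - 1] + cost,  # substitute (or keep)
            ))
        previous = current
    return previous[-1]

def is_too_similar(source, target, threshold=0.2):
    """True if target is almost a copy of source (threshold is hypothetical)."""
    longest = max(len(source), len(target))
    if longest == 0:
        return True
    return levenshtein(source, target) / longest < threshold

print(is_too_similar("C2E2|G2c2|", "C2E2|G2c2|"))   # identical bars
print(is_too_similar("C2E2|G2c2|", "[DF]4z4|A8|"))  # very different bars
```

Samples for which `is_too_similar` returns True would be dropped from the training set.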

Removing heavy repetition 🔁. The last cleaning step. I found that removing samples whose target bars repeat the same notes many times also makes the model more diverse.

Data preparation is done! That was the hardest part; let's go train the model.
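
How exactly repetition was measured is not described, so here is just one simple way to do it: count how often the most common bar occurs in the target. Both the bar-level measure and the 0.5 limit are assumptions.

```python
from collections import Counter

def repetition_ratio(target):
    """Share of target bars taken up by the single most frequent bar."""
    bars = [bar for bar in target.split("|") if bar]
    if not bars:
        return 0.0
    most_common_count = Counter(bars).most_common(1)[0][1]
    return most_common_count / len(bars)

def has_too_many_repeats(target, max_ratio=0.5):
    """True if one bar dominates the target (max_ratio is hypothetical)."""
    return repetition_ratio(target) > max_ratio

print(repetition_ratio("C2E2|C2E2|C2E2|G2c2|"))
print(has_too_many_repeats("C2E2|G2c2|[CEG]4|z4|"))
```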

📇 Tokenising

In the description of the translation task we mentioned tokenization. How can we do that?

Of course, we could develop a complicated algorithm that splits the music by ABC parsing rules (for example, into separate notes and chords), but that has side effects. First, some chords will be very rare and the model can't learn an embedding vector for them, or the validation set may contain a chord that the model has never seen before. We could also train the model character by character, but that is hard, because the output sequence becomes long and difficult to learn.

Our Messiah is BPE! Byte Pair Encoding is an algorithm that splits text into n-grams. The n-grams come from a vocabulary trained on the training text corpus: starting from single characters, the most frequent adjacent pair is merged again and again until the vocabulary reaches the desired size. With this algorithm we can represent the most popular chords with a single token and rare chords with a combination of n-grams.
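
To show the idea, here is a toy BPE trainer in plain Python. It is a simplified sketch and not how YouTokenToMe is implemented: it works on one string, counts adjacent pairs naively, and stops when no pair occurs at least twice.

```python
from collections import Counter

def learn_bpe_merges(corpus, num_merges):
    """Start from characters and repeatedly merge the most frequent adjacent pair."""
    tokens = list(corpus)
    merges = []
    for _ in range(num_merges):
        pair_counts = Counter(zip(tokens, tokens[1:]))
        if not pair_counts:
            break
        (left, right), count = pair_counts.most_common(1)[0]
        if count < 2:
            break  # nothing worth merging any more
        merges.append(left + right)
        merged = []
        i = 0
        while i < len(tokens):
            if i + 1 < len(tokens) and tokens[i] == left and tokens[i + 1] == right:
                merged.append(left + right)  # replace the pair with one new token
                i += 2
            else:
                merged.append(tokens[i])
                i += 1
        tokens = merged
    return merges, tokens

merges, tokens = learn_bpe_merges("[CEG]4[CEG]4[CEG]4", num_merges=5)
print(merges)
print(tokens)
```

After five merges the frequent chord "[CEG]4" has become a single token, which is exactly the effect we want for popular chords.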

For tokenization I used YouTokenToMe, the fastest tokenizer ever. You can train your own tokenizer model right from the command line:

# The training corpus is a plain-text file that
# contains all ABC files concatenated together
yttm bpe --data train_corpus.txt --model abc.yttm --vocab_size 3000

Then in the data-reading code we just tokenize the text with this model.

🚂 Training loop

The HuggingFace library has a ready-made loop for training seq2seq models. We just need to implement a collate function that builds a batch from a list of samples, create the dataset and model objects, and set some training parameters such as batch size, number of epochs, etc.
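
The job of the collate function can be sketched without any framework. This version is an illustration under assumptions: samples are dicts with `input_ids` and `labels`, the padding id is 0, and the result is plain lists (a real training run would convert them to tensors). Padding labels with -100 follows the usual PyTorch convention for positions the loss should ignore.

```python
PAD_ID = 0           # assumption: id of the padding token in the vocabulary
IGNORE_INDEX = -100  # label value that the cross-entropy loss skips

def collate(samples, pad_id=PAD_ID):
    """Pad a list of tokenized samples to a rectangular batch."""
    max_src = max(len(sample["input_ids"]) for sample in samples)
    max_tgt = max(len(sample["labels"]) for sample in samples)
    batch = {"input_ids": [], "attention_mask": [], "labels": []}
    for sample in samples:
        src, tgt = sample["input_ids"], sample["labels"]
        src_pad = max_src - len(src)
        tgt_pad = max_tgt - len(tgt)
        batch["input_ids"].append(src + [pad_id] * src_pad)
        # 1 marks real tokens, 0 marks padding the model should not attend to.
        batch["attention_mask"].append([1] * len(src) + [0] * src_pad)
        batch["labels"].append(tgt + [IGNORE_INDEX] * tgt_pad)
    return batch

batch = collate([
    {"input_ids": [5, 6, 7], "labels": [8, 9]},
    {"input_ids": [5], "labels": [8, 9, 10]},
])
print(batch)
```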

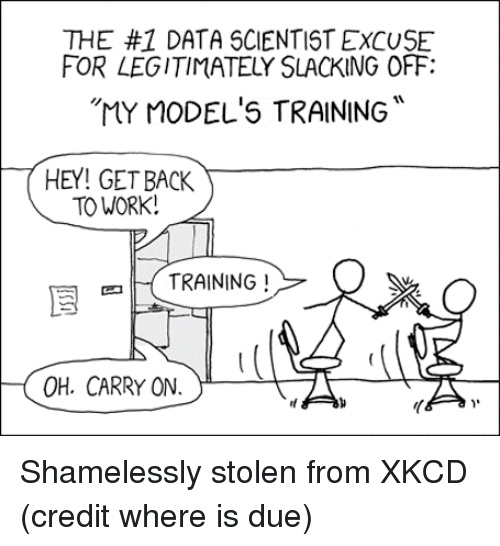

Yes, that's all! You can rest now until your model finishes training.

You can sleep, play Cyberpunk, get married, sleep again, drink coffee, build a house, and so on. I trained my model on six Tesla V100 GPUs for 6 days: 300 epochs, a batch size of 15, and 20 gradient accumulation steps.

⚙️ Generation stage

So, now our model is already trained, woohoo! 🎉

Let’s generate a sample! But first I want to tell you about beam search. We train the model to predict the most probable token at every step, so at generation time we can just pick the token with the highest probability at every step, right? Yes and no… This is called greedy decoding, and it is not always the best approach. We want the sequence of tokens with the maximum probability. The probability of a sequence {x_1, x_2, x_3, …, x_n} is defined as p(x_1) * p(x_2) * p(x_3) * … * p(x_n), where p(x_i) is the probability of token x_i given the tokens before it.

Instead of selecting only the single top token, let's select the top-N tokens and continue generation from each of them in parallel. But notice that the number of candidate sequences then grows exponentially with the length. If the sequence is very long, generation will take as long as the Earth has existed. To avoid this, at every step we keep only the N sequences with the highest probability. We repeat this until the end-of-sequence token (EOS) is generated.

You can read more about sequence sampling here.
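
Here is a small self-contained sketch of the idea. The "model" is a hypothetical lookup table of next-token probabilities, chosen so that greedy decoding picks a worse sequence than beam search. Library implementations add more details (length penalties, batching) that are left out here.

```python
import math

def beam_search(next_token_probs, beam_size, max_len, eos="<eos>"):
    """Keep the beam_size most probable partial sequences at every step."""
    beams = [((), 0.0)]  # (sequence, log-probability)
    finished = []
    for _ in range(max_len):
        candidates = []
        for seq, score in beams:
            for token, prob in next_token_probs(seq).items():
                if prob > 0:
                    # Log-probabilities add up where probabilities multiply.
                    candidates.append((seq + (token,), score + math.log(prob)))
        candidates.sort(key=lambda item: item[1], reverse=True)
        beams = []
        for seq, score in candidates[:beam_size]:
            if seq[-1] == eos:
                finished.append((seq, score))
            else:
                beams.append((seq, score))
        if not beams:
            break
    finished.extend(beams)  # sequences that were cut off at max_len
    if not finished:
        return (), 0.0
    return max(finished, key=lambda item: item[1])

# Hypothetical toy model: the first step slightly prefers "A", but "B" leads
# to a much more confident continuation.
TOY_MODEL = {
    (): {"A": 0.6, "B": 0.4},
    ("A",): {"C": 0.5, "D": 0.5},
    ("B",): {"E": 0.9, "F": 0.1},
}

def toy_next_token_probs(seq):
    return TOY_MODEL.get(seq, {"<eos>": 1.0})

greedy_seq, greedy_score = beam_search(toy_next_token_probs, beam_size=1, max_len=5)
beam_seq, beam_score = beam_search(toy_next_token_probs, beam_size=2, max_len=5)
print(greedy_seq, math.exp(greedy_score))
print(beam_seq, math.exp(beam_score))
```

With a beam size of 1 this is plain greedy decoding and ends up with a sequence of probability 0.6 * 0.5 = 0.3, while a beam size of 2 finds the sequence starting with "B" with probability 0.4 * 0.9 = 0.36.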

The generation code:

🔊 Samples

Okay, we have some generation results. How do we convert the generated ABC into audio files to listen to it? I used the abc2midi tool.

abc2midi input.abc -o out.midi

🏅 Contest results

Contest evaluation is based on an analog of the Turing test: the annotators get two melodies, the original melody and a melody whose second half is generated.

The winner is determined by how often humans prefer the melody continued by the algorithm over the original song.

I did not win this contest, because my model sometimes produces bad generations, with repetitions or wrong notes. I think this depends heavily on the data and on training tricks. For example, to avoid repetition you can use unlikelihood training.

The winning solution was some variation of simply repeating the input bars ¯\_(ツ)_/¯. Anyway, it was a cool experience in a sequence-to-sequence generation contest 😎
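
I did not use it in my solution, so this is only a sketch of the token-level idea: on top of the usual likelihood term, the loss penalises probability mass put on tokens that were already generated. The function name, the dictionary-based "model output" and the weight `alpha` are all illustrative assumptions.

```python
import math

def unlikelihood_step_loss(probs, target, previous_tokens, alpha=1.0):
    """Loss for one step: -log p(target) - alpha * sum(log(1 - p(c))) over repeats c."""
    # Negative candidates: tokens already seen in the context, except the true target.
    negatives = set(previous_tokens) - {target}
    likelihood_term = -math.log(probs[target])
    unlikelihood_term = 0.0
    for token in negatives:
        p = min(probs.get(token, 0.0), 1.0 - 1e-12)  # clamp to avoid log(0)
        unlikelihood_term -= math.log(1.0 - p)
    return likelihood_term + alpha * unlikelihood_term

step_probs = {"C2": 0.5, "E2": 0.3, "G2": 0.2}
plain = unlikelihood_step_loss(step_probs, target="G2", previous_tokens=[])
penalised = unlikelihood_step_loss(step_probs, target="G2", previous_tokens=["C2", "C2"])
print(plain, penalised)
```

The second loss is larger because the model puts half of its probability on "C2", a token it has already produced twice.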