How to build AutoML from scratch

I’m Alex, a 17-year-old data scientist from Russia. I’m in the 11th grade at school.

Recently I took part in the Sberbank Data Science Journey AutoML competition and took 5th place on the private leaderboard. In this article I will describe my AutoML solution for this competition and explain why I did not get the $3k award.

Over the last five years, machine learning has become one of the most common technologies for solving a wide range of tasks. Machine learning algorithms are used in applications such as spam filtering, network intrusion detection, and computer vision, where it is infeasible to write an algorithm of explicit instructions for the task.

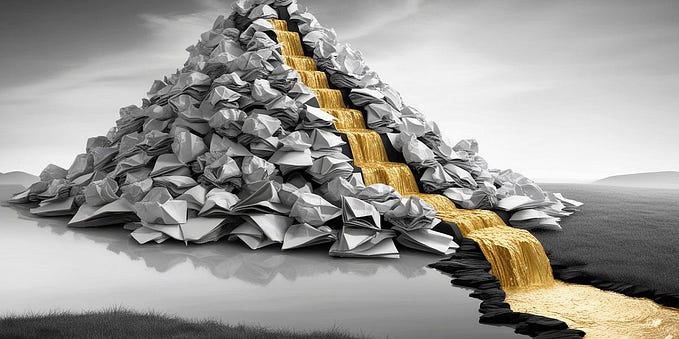

The classical ML approach works as shown in the picture. We have some amount of data, and a data scientist does feature engineering (generates new features from the data and selects the most important ones). After that, they train an ML algorithm on the train set and evaluate it on the test set. This work is repeated until we get good enough accuracy for the task.

The AutoML approach is different: it does not need a human, because the program does the feature engineering and trains the model automatically. AutoML is domain-agnostic: we can run the same algorithm on any type of data, such as credit scoring, sales, text classification, and others.

AutoML saves money and human resources because the solution scales to many tasks: you can use a single algorithm for any task. Of course, a human can achieve better accuracy, but such a solution is less scalable, takes more time, and requires a specialist analyst.

AutoML in production

Many people believe that AutoML is just an area of scientific research and experiments that has nothing in common with production. That is not true. AutoML is an evolving technology area, and many companies are creating their own AutoML solutions and tools that you can use in your projects right now.

Let’s look at some of them.

H2O AutoML

H2O is an open source, in-memory, distributed, fast, and scalable machine learning and predictive analytics platform that allows you to build machine learning models on big data and provides easy productionalization of those models in an enterprise environment.

Productionalization is the main difference between H2O and other frameworks: you can develop your model and features in this framework and then easily integrate them into a production environment such as Kafka, Spark, Storm, etc. This way you can deploy the model on clusters to process big data flows.

H2O has API integrations for different platforms such as Java, Scala, R, Python, etc.

The REST API deserves special attention because it means you can run H2O as a web service for ML in Docker and send HTTP requests to it from other microservices.

H2O integrates with different storage platforms, so you can easily connect an SQL database, the Hadoop Distributed File System (HDFS), or S3 storage to your ML pipeline.

H2O.AutoML is an easy-to-use AutoML toolkit included in the H2O framework. It lets you build machine learning models with all the power of H2O in just a few lines of code, without any special knowledge.

H2O lets you use many different algorithms in your ML pipeline, such as XGBoost, H2O GBM, neural networks, and others. The framework can automatically search for optimal hyperparameters by grid search. H2O can also combine your models into a stacked ensemble, which can improve accuracy on your task.

H2O can also train the AutoML pipeline on Spark, which makes it possible to use a cluster for training many different models.

Basic usage of AutoML is simple: we download a dataset and run a basic AutoML pipeline on it.
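A minimal sketch of such a pipeline might look like the following. It assumes the `h2o` Python package and Java are installed. The function name, the file paths, and the `target` column name are placeholders chosen for illustration, and the target is treated as a classification label.

```python
def run_h2o_automl(train_path, test_path, target="target", max_runtime_secs=300):
    """Train H2O AutoML on a CSV file and predict for a test CSV (sketch)."""
    # Imported lazily so this module still loads where H2O is not installed.
    import h2o
    from h2o.automl import H2OAutoML

    h2o.init()  # start or connect to a local H2O cluster

    train = h2o.import_file(train_path)
    test = h2o.import_file(test_path)

    # Assumption: every column except the target is used as a predictor.
    features = [c for c in train.columns if c != target]

    # For classification the target must be a categorical (factor) column.
    train[target] = train[target].asfactor()

    aml = H2OAutoML(max_runtime_secs=max_runtime_secs, nfolds=5, seed=1)
    aml.train(x=features, y=target, training_frame=train)

    print(aml.leaderboard.head())    # models ranked by cross-validated metric
    return aml.leader.predict(test)  # predictions of the best model
```

For a regression task the `asfactor()` call would be dropped so that the target stays numeric.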

After that, H2O trains many different models, scores them with 5-fold cross-validation, and builds ensembles of the top models.

A developer can use the top model from the leaderboard to predict values for the test set. They can also tune parameters such as the stopping metric, sorting metric, n_folds, column weights, time limit, etc.

Azure automated machine learning

Azure AutoML is a cloud toolkit from Microsoft for using AutoML in the Azure cloud. You can use automated ML in Azure Notebooks.

You just need to create an experiment with some parameters (task, dataset, a blacklist of algorithms, n_folds, and others) and then run it. After that, the black magic of AutoML creates and fits a model for your task.

You can read more about Azure AutoML here.

Google AutoML

Google AutoML is a cloud-based AutoML-as-a-service. You can use Google Cloud to build your own photo or text classifier by drag and drop.

You need to upload your dataset to the platform. Google then trains a model for your task, and you can use it through the Cloud API. It’s the simplest way to use ML in your project.

Other projects

On GitHub you can find many different AutoML projects:

- Nevergrad: Facebook’s toolkit for derivative-free parameter optimization

- NNI: Microsoft’s toolkit for running AutoML experiments on any neural network framework, such as PyTorch, CNTK, Keras, etc.

- AutoKeras: a toolkit for doing AutoML in Keras in a few lines of code

- auto-sklearn: a toolkit for doing AutoML in sklearn in a few lines of code

- TPOT: a toolkit that does AutoML with sklearn and XGBoost models and tunes them with a genetic algorithm

- And others

Competition

SDSJ AutoML is a competition of AutoML systems: developers were challenged to create their own AutoML software and upload it to the contest platform, where it is trained and evaluated.

This competition differs from a classical Kaggle competition: you must send your code in a Docker container, and then the code runs on a server, trains a model on closed datasets, and is tested. It’s similar to ACM ICPC competitions because you send code instead of a CSV file.

The competition datasets are closed and developers can’t see them. The model is evaluated on real bank data.

The program receives as input the name of the train or test dataset, the task type (binary classification or regression), and the calculation time limit. It must train an ML model on the train set within the given time and then make predictions for the test set within the same time limit.
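To make this interface concrete, here is a small sketch of a command-line entry point. The flag names (`--mode`, `--dataset`, `--task`, `--time-limit`) and the safety margin are hypothetical and only illustrate the three inputs described above. They are not the contest platform's real arguments.

```python
import argparse


def parse_args(argv=None):
    """Parse the three inputs described above (flag names are hypothetical)."""
    parser = argparse.ArgumentParser(description="AutoML entry point (sketch)")
    parser.add_argument("--mode", choices=["train", "predict"], required=True)
    parser.add_argument("--dataset", required=True,
                        help="path to the train or test CSV")
    parser.add_argument("--task", choices=["classification", "regression"],
                        required=True)
    parser.add_argument("--time-limit", type=float, default=300.0,
                        help="calculation time limit in seconds")
    return parser.parse_args(argv)


def time_budget(time_limit, safety_margin=0.1):
    """Seconds we allow ourselves, keeping a margin for I/O and saving the model."""
    return time_limit * (1.0 - safety_margin)
```

Keeping a safety margin is a design choice: a solution that exceeds the limit would presumably be stopped before it can write its predictions.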

Input data format

The input dataset is a CSV table with the following columns:

- line_id: row identifier

- target: target value (train set only), a real number for the regression task or 0/1 for the classification task

- <type>_<feature>: an input feature of a given data type (type)

Feature data types:

- datetime: a date in the format 2010-01-01, or a date and time in the format 2010-01-01 10:10:10

- number: a real or integer number (may also encode a categorical feature)

- string: a string feature (names or text)

- id: an identifier (similar to a categorical feature)
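Because the data type is encoded in the column name, the program can decide how to treat each column without looking at the values. A minimal sketch of that grouping follows. The function name and the `service` and `unknown` group labels are illustrative assumptions.

```python
from collections import defaultdict

# The four type prefixes listed above.
KNOWN_TYPES = ("datetime", "number", "string", "id")


def group_columns_by_type(columns):
    """Group column names by the <type> prefix of <type>_<feature>."""
    groups = defaultdict(list)
    for name in columns:
        if name in ("line_id", "target"):
            # Row identifier and target are not input features.
            groups["service"].append(name)
            continue
        prefix = name.split("_", 1)[0]
        if prefix in KNOWN_TYPES:
            groups[prefix].append(name)
        else:
            groups["unknown"].append(name)
    return dict(groups)
```

For example, `group_columns_by_type(["line_id", "number_0", "string_1"])` puts `number_0` under `number` and `string_1` under `string`.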

Metrics

For every task (dataset), the system computes a task-specific metric (RMSE for regression, ROC-AUC for binary classification).

For every task, you get a score from zero to one: 0 for models that perform at the baseline level or worse, and 1 for the best solution on that task. The scores for all tasks are then summed.
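The exact scoring formula is not given here, so the sketch below assumes a simple linear scaling between the baseline and the best solution. The function names are illustrative, and the real platform may compute the score differently.

```python
def task_score(metric, baseline, best, higher_is_better=True):
    """Scale a metric to [0, 1]: 0 at the baseline or worse, 1 at the best.

    Assumption: linear interpolation between the baseline and the best result.
    """
    if not higher_is_better:
        # For RMSE lower is better, so flip the sign of every value.
        metric, baseline, best = -metric, -baseline, -best
    if best <= baseline:
        return 0.0
    score = (metric - baseline) / (best - baseline)
    return max(0.0, min(1.0, score))


def total_score(per_task_scores):
    """The final result is the sum of the per-task scores."""
    return sum(per_task_scores)
```

Under this assumption, a ROC-AUC of 0.75 with a baseline of 0.5 and a best result of 1.0 would give a task score of 0.5.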

My solution

Feature engineering

An interesting fact: I did not use any hardware except my MacBook. The whole solution is implemented in several Python scripts. After adding each new feature, I tested and debugged it on small datasets.

After that, I pushed my code to the platform and looked at how the new features changed my score.

I tried to implement the classical workflow of a data scientist. The big challenge was that sometimes we get a big dataset or a short time limit, and the behavior must change accordingly.

After several iterations of implementing and testing features, I arrived at my final data preprocessing and model training pipeline.

Dataset preparation

- If the dataset is big (>2 GB), we calculate the feature correlation matrix and delete correlated features.

- Otherwise, we apply mean target encoding and one-hot encoding.

- After that, we select the top 10 features by the coefficients of a linear model (Ridge/LogisticRegression).

- We generate new features by pairwise division of the top 10 features. This produces 90 new features (10^2 - 10), which are concatenated to the dataset.
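The steps above can be sketched with pandas and scikit-learn as follows. This is an illustration under assumptions, not the code from my repository: the function names, the 0.95 correlation threshold, the standardization before Ridge, and the handling of zero divisors are all choices made for this example. It also assumes numeric columns without missing values.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import Ridge


def drop_correlated(df, threshold=0.95):
    """Drop one column from every pair with |correlation| above the threshold."""
    corr = df.corr().abs()
    # Keep only the upper triangle so that each pair is checked once.
    upper = corr.where(np.triu(np.ones(corr.shape, dtype=bool), k=1))
    to_drop = [col for col in upper.columns if (upper[col] > threshold).any()]
    return df.drop(columns=to_drop)


def mean_target_encode(train_col, target):
    """Replace each category with the mean target observed for it."""
    means = target.groupby(train_col).mean()
    return train_col.map(means)


def top_k_by_linear_model(X, y, k=10):
    """Rank features by absolute Ridge coefficient on standardized columns."""
    X_std = (X - X.mean()) / X.std().replace(0, 1)
    model = Ridge(alpha=1.0).fit(X_std, y)
    order = np.argsort(-np.abs(model.coef_))
    return list(X.columns[order[:k]])


def pairwise_division(df, columns):
    """Add a/b for every ordered pair a != b, which gives k*(k-1) new columns."""
    new = {}
    for a in columns:
        for b in columns:
            if a != b:
                # Zero divisors become NaN instead of producing infinities.
                new[f"{a}_div_{b}"] = df[a] / df[b].replace(0, np.nan)
    return pd.concat([df, pd.DataFrame(new, index=df.index)], axis=1)
```

Note that this plain mean target encoding uses the target of the same rows it encodes, so in practice it is usually computed out-of-fold or with smoothing to limit leakage.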

Model training

- If the dataset is small, we train three LightGBM models with k-fold cross-validation and then blend the predictions from every fold.

- If the dataset is big and the time limit is short (5 minutes), we just train linear models (logistic regression or ridge).

- Otherwise, we train one big LightGBM model (n_estimators=800).

You can find the source code of my solution on my GitHub.
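The branching and the fold blending can be sketched like this. The size threshold (reusing the 2 GB boundary from dataset preparation), the strategy names, and the helper names are assumptions for illustration. The blending helper accepts any model factory with `fit` and `predict`, so it works with LightGBM, for example `lambda: lightgbm.LGBMRegressor()`, or with a scikit-learn model.

```python
import numpy as np
from sklearn.model_selection import KFold


def choose_strategy(dataset_bytes, time_limit_secs,
                    big_dataset_bytes=2 * 1024**3, short_limit_secs=300):
    """Pick a training strategy from dataset size and time limit (assumed thresholds)."""
    if dataset_bytes <= big_dataset_bytes:
        return "kfold_blend"   # small dataset: one model per fold, then blend
    if time_limit_secs <= short_limit_secs:
        return "linear"        # big dataset, short limit: linear model only
    return "single_gbm"        # big dataset, longer limit: one large GBM


def kfold_blend_predict(make_model, X_train, y_train, X_test, n_splits=3, seed=0):
    """Fit one model per fold and average their predictions on the test set."""
    folds = KFold(n_splits=n_splits, shuffle=True, random_state=seed)
    preds = []
    for train_idx, _ in folds.split(X_train):
        model = make_model()
        model.fit(X_train[train_idx], y_train[train_idx])
        preds.append(model.predict(X_test))
    return np.mean(preds, axis=0)
```

The helper expects NumPy arrays. For binary classification one would average `predict_proba(X_test)[:, 1]` instead of `predict`, since ROC-AUC needs scores rather than hard labels.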

Results

On the final leaderboard I took 5th place. WOHOOO!

Awards 🏆

All winners were awarded at Sberbank Data Science Journey, a global machine learning festival from Sberbank.

Winners got many prizes such as hoodies, hats, cups, power banks, and more. They also got some money 💸:

- 1st place: 1M rubles ($15k)

- 2nd place: 500k rubles ($7.5k)

- 3rd place: 300k rubles ($4.3k)

- 4th–5th place: 200k rubles ($3k)

- 6th–10th place: 100k rubles ($1.5k)

- Also, the top 3 GitHub solutions got 100k rubles ($1.5k)

On the private leaderboard I was in 5th place, but because I am under 18, I took part outside the official competition and was not eligible for the cash awards.

Conclusion

It was a very interesting and valuable competition because I had never taken part in machine learning competitions where you need to send code instead of a CSV submission. This format doesn’t require many computational resources, because your solution is limited by the resources of the contest platform and you can’t create a big stacked ensemble of models.

I think that machine learning competitions should move from the Kaggle-like format to the 🐋 Docker-like one.

Also, AutoML is a really interesting area because it lets you apply machine learning algorithms in real production without deep knowledge of them. It opens data science to many developers and allows them to create many interesting projects. I hope that companies like H2O, Google, and Microsoft will continue to develop new AutoML tools.